ETL Sync

Legacy ETL platform end-of-life

The Open Analytics Hub is now available, allowing you to directly query and export your BigPanda raw data from Snowflake.

As part of BigPanda's commitment to delivering modern, cost-effective, and scalable data solutions, the legacy ETL data platform backed by Redshift will be deprecated and transitioned to the new platform.

See the Open Analytics Hub documentation for more information about accessing your data. Contact your BigPanda account team if you have any questions.

BigPanda maintains raw data in standard objects that can be accessed through the BigPanda ETL pipeline, or can be exported in CSV format and processed through your own external tools.

Both BigPanda Analytics and the ETL Pipeline make data from the platform available in near real-time. Data will be available on average in 1-2 minutes, but during high data-volume periods may take as many as 6-8 minutes to be available.

Raw data export

BigPanda is able to assist with exporting raw data. We recommend working with a data specialist on your team to process the data once it is exported. BigPanda is unable to assist with any raw data processing or sync beyond what is mentioned on this page.

CSV file format

The exported CSV will not have headers and the source data may change at any time. Ensure your ETL process is able to handle changes in the CSV

To connect to the BigPanda ETL Pipeline, your data tools will need to first be configured.

Files can be accessed through the ETL by syncing or copying the raw files into a bucket of your own for your own ETL to transform and store the data.

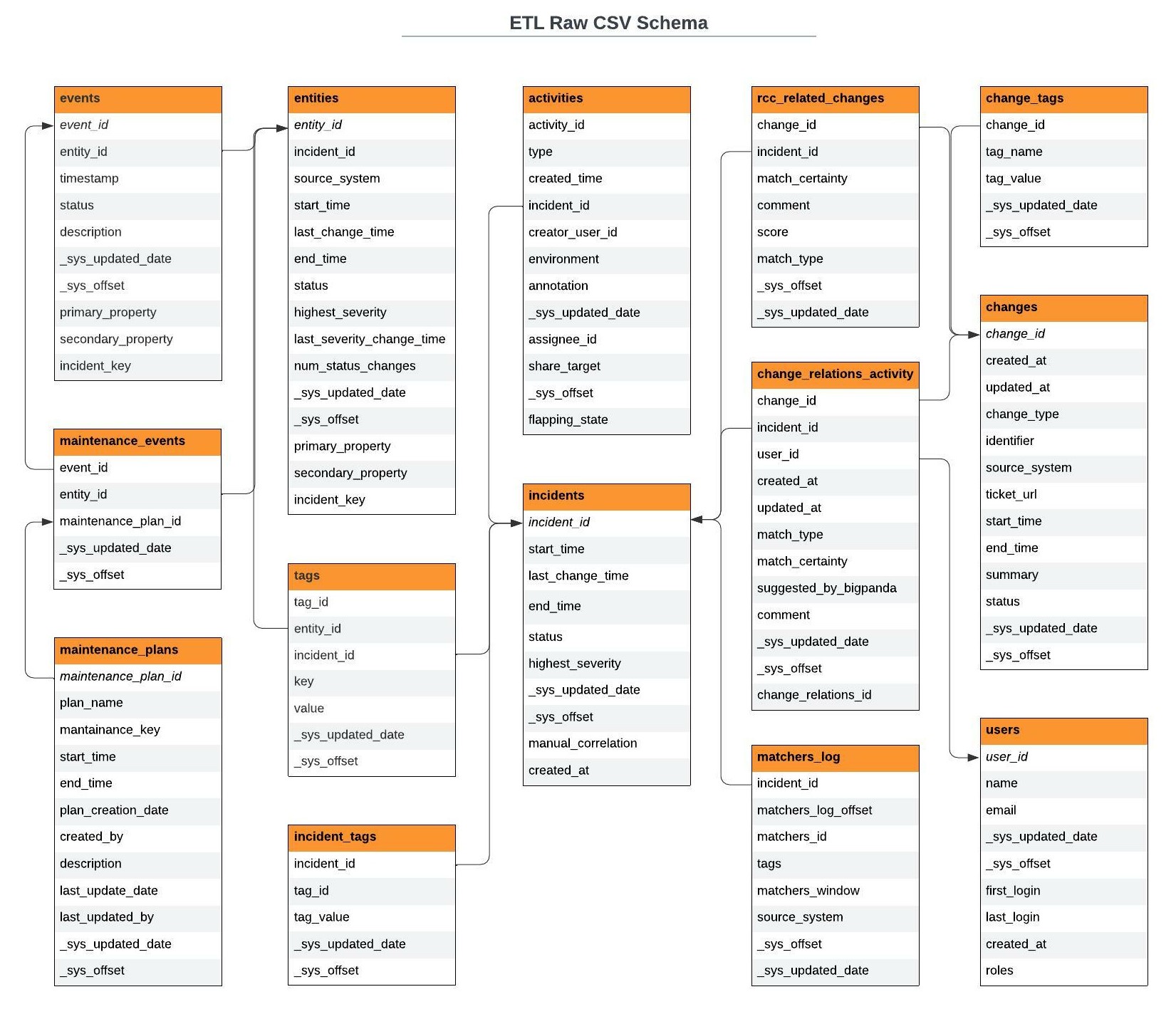

Schema

Each of the objects below are available for ETL sync or can be downloaded in CSV format.

Activities - a single action that a user has been doing

Change Relations Activities - changes that have been marked as matched or not matched to an Incident

Changes - Changes that have been sent to and processed by BigPanda

Change Tags - the tags associated to a single change in BigPanda

Entities - a single aggregated alert in BigPanda (e.g, a row in the BigPanda timeline view)

Events - all status changes for a single raw event in BigPanda

Incidents - a single BP Incident

Incident Tags - the incident tags associated with a single BigPanda incident

Maintenance Events - events that matched to BigPanda maintenance plans

Maintenance Plans - a single BigPanda maintenance plan

Matchers Log - the correlation patterns that matched and applied to a single BigPanda incident

RCC Related Changes - a single change suspected as a potential root cause by the BigPanda algorithm for a single incident

Tags - a single tag of an entity

User - a single user

In the ETL process, 2 additional columns are added to each table with ETL information:

_sys_import_time - the timestamp of the sync

_sys_execution_id - The unique identifier of the sync execution

Primary identifiers

When using the BigPanda ETL, incident_id and tag_id are the primary identifiers

For details on the attributes for each available object, see the Raw Data ETL Schema documentation.

|

ETL Raw CSV Schema

AWS S3-Based Sync

An S3 Sync can be configured to mirror BigPanda BI analytics information in your organization’s S3 bucket.

When the process is enabled in BigPanda, it starts generating the raw data files in CSV format into the S3 bucket on the BigPanda side. Replication privileges then allow the data to be replicated into the S3 bucket for your organization.

AWS S3 Configuration

Contact BigPanda Support and request a product change.

Create an S3 bucket with versioning enabled.

Provide BigPanda Support with the ARN and AWS account ID. BigPanda Support will provide a bucket policy.

Add the bucket policy to your bucket to begin to receive objects through replication.

Click on the bucket.

Select the Permissions tab.

Select Bucket Policy and enter the policy provided by support.

Select the Management tab.

Choose Replication and click More -> Receive objects.

Confirm that the objects are received in your S3 bucket.

Encryption

There is no encryption on the S3 bucket on BigPanda side. When the objects are replicated into the Customer bucket, they assume the destination privileges/permissions as well as encryption requirements.

If you use the default encryption on your S3 bucket, you need to add the following statement to the KMS key used on the bucket:

{

"Sid": "Allow BigPanda to Generate Data Key",

"Action": "kms:GenerateDataKey",

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::103749124141:root"

},

"Resource": "*"

}

Next Steps

Review the Raw Data ETL Schema

Dig into additional reporting options with BigPanda Analytics